In-memory Computing, Rich Datasets Are Transforming Precision Medicine

By Dr. David Delaney, Chief Medical Officer, Healthcare at SAP

Next-generation precision medicine, powered by broader, richer datasets and high-performance in-memory computing, is transforming healthcare by using data wherever it lives and whatever form it takes to drive better, more personalized decisions for patients. That means drawing on existing sources such as clinical, financial, and claims data; on emerging panomic sources (epigenomic, genomic, transcriptomic, proteomic, and metabolomic data); and on a growing body of additional data, such as pollen counts, wind patterns, and air quality, available through public application programming interfaces (APIs).

Medicine has traditionally been a chimera of art and science, relying on an uneasy blend of evidence-based medicine, traditional treatment modalities with little supporting data, and simple pattern recognition that extrapolates from cases a practitioner judges to be similar based on gut feeling and intuition. In the past, that was the best we could do. But now we have the opportunity to use richer and broader datasets with in-memory computing to draw on the collective experience of entire organizations, systems, regions, countries, or even the globe, supercharging practitioners’ decision-making power with insight from a far broader group of patients, more closely matched than ever before. In short, we can and must do better in moving the needle, whenever possible, from art toward science and data-driven decision-making.

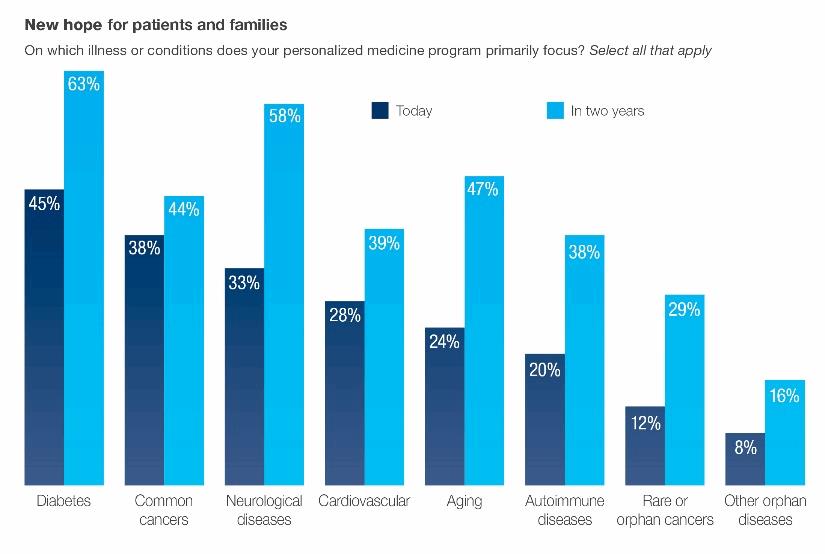

The potential positive impact of precision medicine on the health system is staggering: the move from a one-size-fits-all model to personalized medicine is estimated to reduce health costs by up to $750 billion in the United States alone. More importantly, precision medicine promises to improve patient outcomes in the most devastating, far-reaching diseases while reducing costs (Figure 1). Truly, a win-win scenario.

And this revolution has already started. Among healthcare professionals surveyed in an Oxford Economics study, two-thirds say personalized medicine is having a measurable effect on patient outcomes. Doctors are prescribing better-tuned dosages based on patients’ metabolic rates and defining treatments according to a patient’s genetic and genomic information, age, and personal habits. Tailored treatment plans also reduce wasted resources and expenses while allowing physicians to serve more patients. Additionally, providers are leveraging the collective wisdom of their organizations to provide more nuanced decision-making based on cohorts of similar patients who have already passed through the system.

Early outcomes suggest that precision medicine, together with better use of both existing and novel datasets, is starting to have a significant impact on patients and healthcare professionals alike. The following real-world examples show how the in-memory computing revolution is propelling precision medicine forward and changing how we diagnose, treat, and prevent diseases.

CancerLinQ Harnesses Big Data

CancerLinQ, an initiative launched by the American Society of Clinical Oncology (ASCO), puts Big Data to work analyzing millions of cancer patients’ medical records to uncover patterns and trends, and lets oncologists measure their care against that of their peers and against recommended guidelines. It surprises many patients and families, but the data oncologists rely on when choosing chemotherapeutic regimens come from a tiny subset of patients: the only 3 percent who take part in clinical trials. This might be acceptable if the enrolled patients represented the general population of cancer patients well. However, real-world cancer patients tend to be older, sicker, and more ethnically diverse than typical study patients, who tend to be “fit except for the cancer.”

Oftentimes, the available clinical trials would have excluded patients like the one the oncologist is treating, forcing extrapolation and less certain decision-making. CancerLinQ unlocks the knowledge and value from the 97 percent of cancer patients not involved in clinical trials to help deliver better, more data-driven decision-making based on real-world results of patients closely matched to the patient at hand.

The 58 vanguard practices are now up and running on CancerLinQ, with 750,000 cancer patient records stored in the system. Approximately 200 additional practices are in line to be added. Given the interest among the 40,000 oncologists who are ASCO members, this is only the start of an amazing journey.

Oncologists soon will have vast amounts of usable, searchable, real-world cancer information to help them provide better quality assessments, care coordination, case management, and other healthcare operations activities.

National Cancer Institute’s Genomic Data Commons

Another great example of Big Data analytics is the National Cancer Institute’s Genomic Data Commons (GDC), a unique public data platform for storing, analyzing, and sharing genomic and associated clinical data on cancer. Researchers are uploading tumor genomes, the DNA unique to cancer cells, and will be able to share their data and collaborate on cancer prevention, diagnosis, and therapies. The GDC will hold data from 10,000 cancer patients and their tumors.

The GDC is crowdsourcing rich data for cancer researchers and providing a powerful technology platform that is interactive and easily searchable. Researchers can use the GDC as a test bed to accelerate the quest for new approaches to treat and prevent cancer.

Multi-hospital Data Sharing Advances Disease Management

Hospitals are realizing both better patient outcomes and cost savings with Big Data. Partners Health, a 12-hospital system in Massachusetts, is a leader in precision medicine and employs a 10,000-person IT team. Clinicians have a research portal where they can analyze patient samples from an in-house biobank, and they can aggregate patient data from multiple hospitals.

The organization is applying Big Data techniques to understand the costs of diabetes management and heart failure and define more cost-effective strategies. In tandem, Partners Health has a massive transition to a new electronic medical records system underway that will support better information sharing among hospitals. It’s too early to assess the savings, but business and tech expectations are high.

Defining Better Clinical Guidelines With Predictive Analytics

Improving patient outcomes is an ongoing theme in precision care, and there’s considerable room for improvement in implementing consistent processes. I’m often asked what healthcare can learn from other industries supported by SAP, and manufacturing offers some good takeaways. Even though healthcare is significantly more complex than manufacturing, both industries face extensive costs as a result of mistakes and benefit tremendously from continuous process improvement.

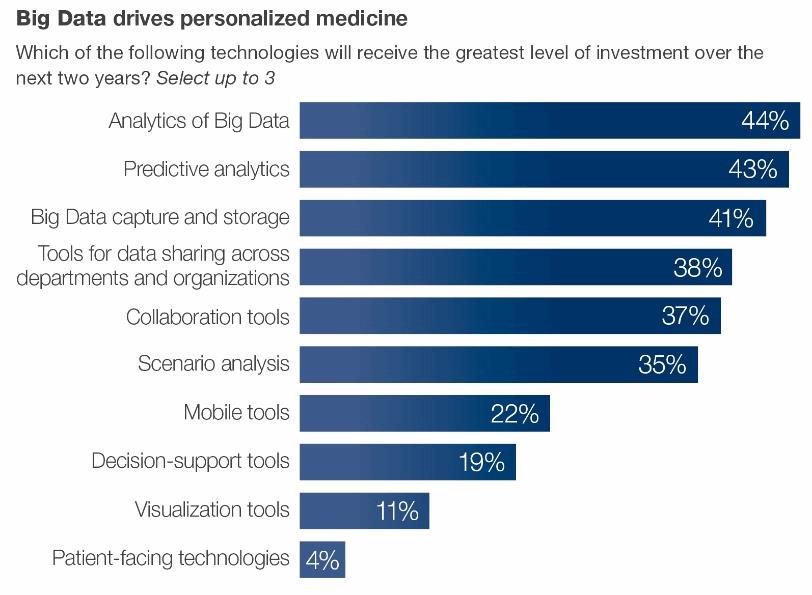

Big Data and predictive analytics can identify aspects of processes that correlate with superior outcomes. These insights are key to fostering innovation, continuously honing processes, improving outcomes, and identifying areas for further improvement, thereby creating a virtuous cycle of continuous improvement.

Making Data Your Ally

The potential for precision medicine to improve outcomes while reducing cost is too great for healthcare groups to ignore. The government and corporate entities funding healthcare and the awakening giant of patient consumerism are escalating demands to accelerate this transformation. Last year, the White House committed the nation to a $215 million investment in precision medicine, and in his 2016 State of the Union address President Obama announced the Cancer Moonshot, a $1 billion government initiative to cure cancer. The investments and media attention are further driving public awareness and support, along with a cultural shift around data sharing.

Data sharing has always been a thorny problem for healthcare. Patients, hospitals, payers, and researchers (basically everyone) are wary of sharing data, but that caution is easing given the increasingly obvious upside of embracing precision medicine.

At an event hosted by SAP and Bloomberg Government in April, I served on a panel with Greg Simon, the Executive Director of the White House Cancer Task Force. “Every other part of our lives has been improved by computing and medicine is the last one to get involved,” said Simon. “Big Data is the key to try to find out how to treat people better, earlier, and more successfully to prevent, detect, and cure cancer.”

As healthcare IT teams prepare for the shift to precision medicine, they are experiencing a fundamental change in expectations. In the past, it was acceptable to say that data was siloed or not structured in a way that allowed it to be used at the point of decision. In-memory computing now allows near-real-time analysis of complex, disparate datasets that span geographic locations and data types, including unstructured data. Now, the key question is, “If there is data out there that will reduce uncertainty and enable me to make a better decision for my patient, why can’t I leverage it at the point of decision?” Platforms that solve this problem are on the rise, as are their adoption rates (Figure 2).

About The Author

David P. Delaney, MD, is chief medical officer of SAP and head of the Americas healthcare team. He is a board-certified critical care physician with 14 years of practice as an intensivist at Beth Israel Deaconess Medical Center, where he also held a faculty teaching appointment at Harvard Medical School.